Let me set the scene for you. It’s October 2005 and I (Peter) have just been promoted to Program Manager for my team of 25. After listening to a one-hour introduction to Scrum by Jeff Sutherland, I’m wondering if this Scrum thing might work for our team. After taking Ken Schwaber’s two-day Certified Scrum Master class, I’m 100% convinced that Scrum will be the best thing to happen to our team since version control. We adopted Scrum and were off to the races.

Well, mostly off to the races. We were just about to start building a 1.0 audio application (what eventually shipped as Adobe Soundbooth). In the first sprint, starting from scratch, we delivered a releasable increment of the product, and, as Scrum is built to do, we learned what parts of our process didn’t fit well in a short timeboxed iteration. No problem, right? That’s what retrospectives are for!

Our first retrospective was mostly a celebration. After all, we had adopted a pretty radically different approach to building products. We had successfully delivered a few features in one sprint, worked through some tough architectural decisions and gained some initial buy-in from some of the more skeptical members of the team — and no one was harmed in the process! Sure, some features the team had pulled into Sprint 1 didn’t get all the way done, but that was to be expected in our first sprint or two, right? There’s lots of learning in the early sprints.

By the end of Sprint 8, we were getting pretty frustrated. We had now tried several different approaches to getting the work all the way “done” by the end of a sprint, and nothing was working. (Hint: Breaking the sprint into traditional development phases didn’t work. Neither did focusing on testing speed, efficiency, or automation.) We consistently ran out of time to finish testing all of the features that had started development. Some team members were making the argument that this Scrum stuff just doesn’t work in our context.

Learning from old burndown charts

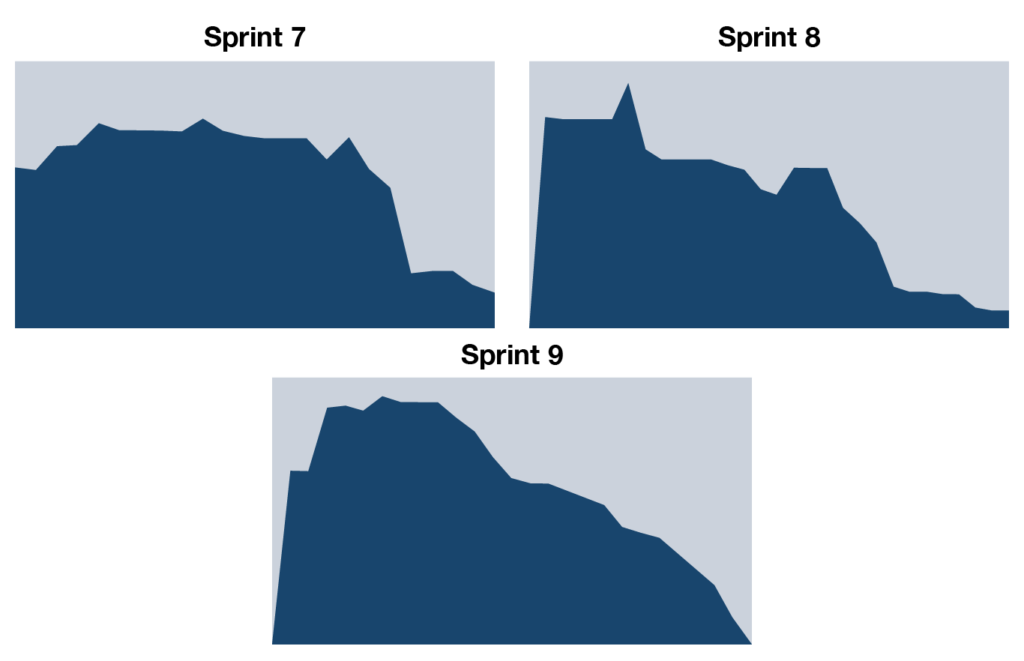

I recently found the sprint burndown charts for Sprints 7, 8, and 9 on an old backup drive. If you already know how to read a sprint burndown chart, skip the next paragraph.

Still here? OK, here’s how the chart works: On the X-axis (left to right), we plot the days in the sprint. On the Y-axis (top to bottom), we plot the total work remaining in the sprint. In an idealized sprint, the team would pull work from the product backlog into the sprint during the sprint planning meeting, so on day one of the sprint, we’d see all of the work left to do. As the team begins working and moving things to done, we’d see the remaining work drop day by day, eventually (hypothetically) to zero. Since most work done by most Scrum teams is not in the ordered, predictable domain, it’s rare to see a perfect burndown, but some teams still find the visualization helpful for making day-to-day decisions about remaining work vs. remaining time.
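If it helps to see the arithmetic behind the chart, here is a minimal Python sketch. Everything in it is made up for illustration: the `Day` record, the function names, and the numbers are hypothetical, not data from our actual sprints. It assumes work is tracked in hours and that each day some work is finished and, possibly, some new work is discovered.

```python
from dataclasses import dataclass


@dataclass
class Day:
    """One day of a sprint (hypothetical record, for illustration only)."""
    completed: float     # hours of work finished that day
    added: float = 0.0   # hours of newly discovered work that day


def burndown(initial_work, days):
    """Remaining work at the end of each day, starting with the planned total."""
    remaining = [initial_work]
    for day in days:
        # Newly discovered work pushes the line up; finished work pulls it down.
        remaining.append(max(remaining[-1] + day.added - day.completed, 0))
    return remaining


def ideal_line(initial_work, num_days):
    """The straight 'perfect' burndown from the planned total down to zero."""
    return [initial_work * (num_days - d) / num_days for d in range(num_days + 1)]


# A made-up five-day sprint that starts with 60 hours of planned work.
sprint = [Day(completed=5, added=15), Day(10, 5), Day(20), Day(20), Day(25)]
actual = burndown(60, sprint)
ideal = ideal_line(60, len(sprint))

for day_number, (a, i) in enumerate(zip(actual, ideal)):
    print(f"day {day_number}: remaining={a:g}  ideal={i:g}")
```

In this invented example the remaining work goes 60, 70, 65, 45, 25, 0. The line rises on the first day because more work was discovered than was finished, and only later drops toward zero, while the ideal line falls by the same amount every day.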

Looking back with 15 years of experience, we can see a few clear patterns in Sprints 7 and 8:

We were really bad at sprint planning. Notice how the burndowns burn UP in the first few days? That’s because we were still breaking down the work as the sprint got going. This is in large part due to the next pattern:

We were really bad at product backlog refinement. The features that were pulled into a sprint were not being sliced into thin increments of value before we got to sprint planning. So, as a feature got started, we see little bumps up in the curve as the team digs into the details and discovers more work to do. Which leads to the third pattern:

In both charts, the steepest downward slope happens about ⅔ to ¾ of the way into the sprint. This represents the time when people writing code would check it all into the builds and let the people testing the product get going on testing. We would then race to test everything at the end of the sprint and consistently ran out of time to complete that work and the needed bug-fixing it revealed. This work is represented by the “tails” at the end that don’t quite get to zero.

At the end of Sprint 8, I probably could have been forgiven for not feeling like bringing up the same topic again at the retrospective. Here I was, a novice Scrum Master, with my very experienced, very highly skilled development teams starting to grumble. Maybe they were right. Maybe this Scrum stuff didn’t fit our context. But I had made a commitment. When we started using Scrum, we all agreed that we would try to do it as close to “by the book” as we could, given the organizational constraints we’d face as the first team doing it at our company.

We had committed to each other. The challenge we were facing wasn’t an organizational constraint at all; it was entirely within the team. So I tried one more time. I brought it up at the retrospective, and we took one more swing at it, this time trying to get at the real root cause. It wasn’t the testers’ fault for not finishing their work; they were being constrained by big deliveries of code late in the sprint. It wasn’t the developers’ fault for delivering big chunks of code late in the sprint, either; that’s how they’d been trained to work. The system was delivering exactly the results it was designed to deliver. So we decided to change the system. Here’s the dialog that led to our breakthrough:

“Developer, how often are you checking in code locally?”

“Oh, multiple times per day.”

“Well, why can’t we test that code as soon as it’s checked in?”

“Because the feature isn’t done yet, it’s only partially done. If they started testing on that first check-in, it wouldn’t match the feature spec, and they’d start writing bugs against it.”

“What if, every time you checked in code, you just asked yourself, ‘How could a tester test what I just checked in?’ and then told the tester that?”

“If they agreed to do that, and not test the whole feature before it’s done, that might work.”

This was the change we agreed to try for Sprint 9, and as developer-tester pairs had these discussions during that next sprint, an interesting thing happened. The developers had to think about how to make their very small code check-ins testable. They ended up doing very small, incremental slices of value in order to make that work.

We stumbled upon a pattern that was new to us in 2006, but that most teams today are very familiar with: thin vertical slices. And while we find this pattern so critical to the success of Agile that we’ve written entire courses to teach teams how to do it, that’s not the point of this course. If I had given up on the retrospective after Sprint 8, we never would have had the success that we did in Sprint 9. It was like magic! Everything we committed to in sprint planning was done on the last day of the sprint. And that became the new pattern. We weren’t always perfect, but unfinished work at the end of a sprint became the exception rather than the rule.

From that day, I’ve been a believer in retrospectives. I knew it could work. And we weren’t even very good at them! We took eight sprints to figure out that core issue. I wish I had had this course back then. I bet using the techniques we’ve learned and are sharing here would have saved our team months of struggling with marginal results from the way we did retrospectives.

But even if you don’t take all the advice in this course, a key lesson was to keep trying, to not give up, and to keep going after the problem in new ways. We stumbled our way to success and, 15 years later, I can confidently tell you what works in a retrospective and what doesn’t.

Today, most teams are working remotely, at least some of the time. Many teams are fully distributed, with everyone working from home, to a degree we’ve never previously experienced.

Good retrospectives are more important than ever. Remote teams rarely improve by accident. Issues are easy to miss and easy to leave unresolved for too long. Commit to regular reflection and experimentation, and you have a chance to become a great remote team.

This course will help you do that reflection and experimentation better than 99.9% of teams in the world. We’re glad you’re here!

This course is divided into four main parts.

Essentials for Facilitating Great Retrospectives introduces 8 key behaviors for great facilitation, whether you’re facilitating a retrospective or another meeting, whether your meeting is virtual or in-person. We cover the behaviors in the context of retrospectives, but you can practice them in any other meeting, as well.

Techniques for Great Virtual Retrospectives shows you how to use those facilitation skills in the context of a virtual retrospective using a video conference tool like Zoom and a visual collaboration tool like Miro. In addition to generally applicable virtual retrospective techniques and tips, you’ll get full walkthroughs of two retrospective formats we like and reusable Miro boards for your own team.

But What If… answers some of the most common challenges we’ve encountered in 20 years of facilitating retrospectives ourselves and teaching others to facilitate well. Struggling to get your team to engage? You’ll find help for this challenge and several others in this section. (Note that this section builds on all the content in the previous two sections. Feel free to look ahead for your top challenge, but expect to need some of the earlier stuff to put the answer into practice.)

Finally, Oh and Also… has bonus material on group decision making, large group facilitation, and an annotated list of our favorite resources for further learning.

Take your time through the course, practicing as you go. The real learning happens when you try it out in your own meetings, not when you read about it. So, be patient with yourself and trust the learning process.

Congratulations on investing in your skills and the health of your team, and enjoy the journey!